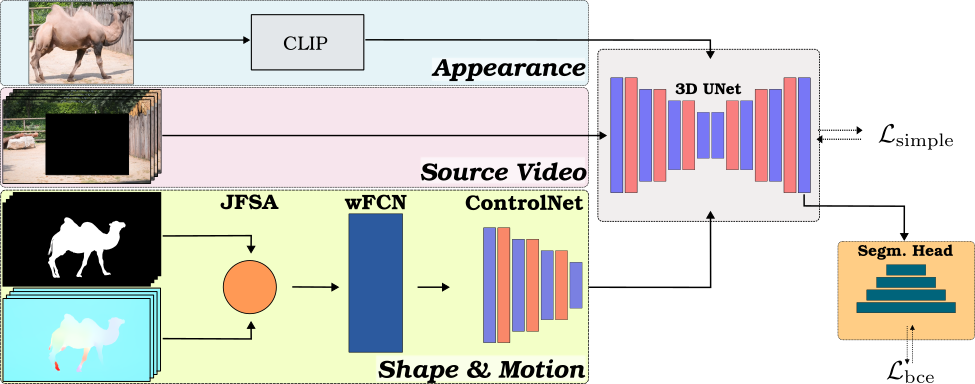

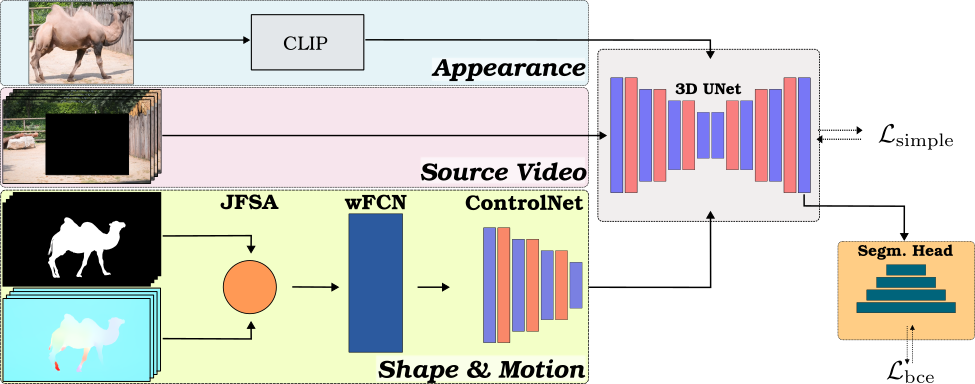

Method

The objective is to alter the structure of one object in a source video, guided by an edited first keyframe; simultaneously, a driver image is used to edit the object's appearance. Note that the edit is restricted to one specific object, and the background should remain unaffected. The shape edit is shown for every frame for visualization purposes only; in practice, only the first keyframe is provided.

The model is tasked with editing the appearance of a target object in a source video, guided by a driver image. Both the structure of the object and the background should remain unchanged.
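The "background should remain unchanged" requirement can be made concrete with a small sketch. This is not the method used by VASE; it is a toy illustration that assumes a per-frame binary mask of the target object is available, and the names `composite_frame` and `background_unchanged` are hypothetical helpers introduced here. Frames are represented as small grayscale grids to keep the example dependency-free.

```python
from typing import List

Frame = List[List[float]]  # grayscale frame as rows of pixel values
Mask = List[List[int]]     # 1 inside the target object, 0 for background


def composite_frame(source: Frame, edited: Frame, mask: Mask) -> Frame:
    """Take edited pixels inside the object mask and source pixels elsewhere."""
    return [
        [e if m else s for s, e, m in zip(src_row, edit_row, mask_row)]
        for src_row, edit_row, mask_row in zip(source, edited, mask)
    ]


def background_unchanged(source: Frame, output: Frame, mask: Mask,
                         tol: float = 1e-6) -> bool:
    """Return True if every background pixel (mask == 0) matches the source."""
    return all(
        abs(s - o) <= tol
        for src_row, out_row, mask_row in zip(source, output, mask)
        for s, o, m in zip(src_row, out_row, mask_row)
        if not m
    )


# Toy 2x3 frame: the object occupies the middle column (assumed mask).
source = [[0.1, 0.5, 0.1], [0.1, 0.5, 0.1]]
edited = [[0.3, 0.9, 0.2], [0.0, 0.8, 0.4]]  # an edit that leaked into the background
mask = [[0, 1, 0], [0, 1, 0]]

output = composite_frame(source, edited, mask)
print(background_unchanged(source, edited, mask))  # raw edit violates the constraint
print(background_unchanged(source, output, mask))  # composited result satisfies it
```

A check of this kind only covers the appearance-editing setting, where the object mask is the same before and after the edit. When the shape itself changes, the region occupied by the object differs between source and output, so a single mask per frame would no longer be enough.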

@misc{peruzzo2024vase,
  title={VASE: Object-Centric Appearance and Shape Manipulation of Real Videos},
  author={Elia Peruzzo and Vidit Goel and Dejia Xu and Xingqian Xu and Yifan Jiang and Zhangyang Wang and Humphrey Shi and Nicu Sebe},
  year={2024},
  eprint={2401.02473},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
